The question “does my Linux system have enough memory?” sounds easy on the surface, but it’s surprisingly cumbersome to answer once you dig deeper. Yup, free -m is not even half of the story, and there are all kinds of signs of memory pressure in Linux.

First of all, there’s the vm.overcommit_memory kernel setting, which defines the effective overcommit policy. Similarly to a bank, a Linux system by default allows processes to “borrow” way more than the memory that is actually available – even considering swap. And as with banks, it all works fine while there’s enough actual “cash”. The other possible values for vm.overcommit_memory are “always overcommit” and “never overcommit”, which turn out to be much less useful in practice.
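To see which policy a given box runs with, a minimal sketch along these lines could help. The mapping of values (0, 1, 2) follows the kernel's overcommit accounting documentation; the function names are hypothetical helpers of mine, not part of any library.

```python
from pathlib import Path

# Meaning of vm.overcommit_memory values per the kernel documentation.
OVERCOMMIT_POLICIES = {
    0: "heuristic overcommit (default)",
    1: "always overcommit",
    2: "never overcommit (strict accounting)",
}


def describe_overcommit(raw: str) -> str:
    """Translate the raw content of vm.overcommit_memory into a policy name."""
    value = int(raw.strip())
    return OVERCOMMIT_POLICIES.get(value, f"unknown policy {value}")


def current_overcommit_policy(path: str = "/proc/sys/vm/overcommit_memory") -> str:
    """Read the setting from procfs (Linux only)."""
    return describe_overcommit(Path(path).read_text())
```

On a Linux machine, print(current_overcommit_policy()) should report the effective policy; sysctl vm.overcommit_memory gives the same raw number.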

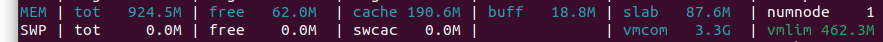

We may easily have a picture like this:

Here we have ~900 MB of RAM, no swap, but 3.3 GB of committed virtual memory – roughly 3.6 times the actual memory the system has. So is it OK or not? Well, it depends on how the system behaves while handling its workload. Let’s take a look at some signs of memory pressure in Linux.
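The same ratio can be computed from /proc/meminfo, which exposes the committed amount as Committed_AS. Below is a rough sketch under that assumption; parse_meminfo and commit_ratio are hypothetical helper names, and the sample numbers only approximate the picture above.

```python
def parse_meminfo(text: str) -> dict[str, int]:
    """Parse /proc/meminfo content into a {field: value} mapping (mostly kB)."""
    fields = {}
    for line in text.splitlines():
        name, _, rest = line.partition(":")
        parts = rest.split()
        if parts:
            fields[name.strip()] = int(parts[0])
    return fields


def commit_ratio(meminfo: dict[str, int]) -> float:
    """Committed virtual memory relative to RAM plus swap."""
    backing = meminfo["MemTotal"] + meminfo.get("SwapTotal", 0)
    return meminfo["Committed_AS"] / backing


# Illustrative sample: ~900 MiB of RAM, no swap, ~3300 MiB committed.
SAMPLE = """MemTotal:         921600 kB
SwapTotal:             0 kB
Committed_AS:    3379200 kB
"""

ratio = commit_ratio(parse_meminfo(SAMPLE))
print(f"committed / (RAM + swap) = {ratio:.2f}")
```

On a live system the input would come from open("/proc/meminfo").read() instead of the sample string. A ratio above 1 is not a problem by itself; it only tells how much has been “borrowed”.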

OOM kills belong to the signs of memory pressure in Linux

The OOM killer is a system feature – there are both kernel space and user space flavors these days – which aims to help deal with memory pressure. The idea is pretty simple: if we don’t have enough memory, let’s kill some process to free it up. The downside is that you may lose a process critical to your workload to the OOM killer. Sidestepping this issue requires fine-tuning the OOM score of such critical processes to decrease the probability that they become OOM killer targets.
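For the kernel OOM killer, that tuning goes through /proc/<pid>/oom_score_adj, which accepts values from -1000 (never kill) to 1000 (kill first). Here is a minimal sketch, assuming that interface; the helper names and the proc_root parameter (there only to make the code testable) are my own inventions.

```python
from pathlib import Path

OOM_SCORE_ADJ_MIN = -1000  # effectively exempts the process from the kernel OOM killer
OOM_SCORE_ADJ_MAX = 1000   # makes the process the preferred victim


def read_oom_score_adj(pid: int, proc_root: str = "/proc") -> int:
    """Return the current OOM score adjustment of a process."""
    path = Path(proc_root) / str(pid) / "oom_score_adj"
    return int(path.read_text().strip())


def set_oom_score_adj(pid: int, value: int, proc_root: str = "/proc") -> None:
    """Write a new adjustment; lowering it usually requires root privileges."""
    if not OOM_SCORE_ADJ_MIN <= value <= OOM_SCORE_ADJ_MAX:
        raise ValueError(f"oom_score_adj must be within [-1000, 1000], got {value}")
    path = Path(proc_root) / str(pid) / "oom_score_adj"
    path.write_text(f"{value}\n")
```

Something like set_oom_score_adj(pid, -500) makes a critical process a less likely target without exempting it completely. User space OOM killers have their own configuration, which this sketch does not cover.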

One easy way to find out how much kernel space OOM killer activity happens on the system is sudo dmesg -T | grep "Killed process". If you see a lot of output, then you definitely have memory pressure being handled by the OOM killer.
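To go one step beyond grep and see which processes get killed most often, the same output can be tallied per process name. This is a rough sketch: it assumes the usual “Killed process <pid> (<name>)” wording of the kernel message, and the sample log lines are made up for illustration.

```python
import re
from collections import Counter

# Matches the pid and command name in kernel OOM killer messages.
KILL_RE = re.compile(r"Killed process (?P<pid>\d+) \((?P<comm>[^)]*)\)")


def count_oom_kills(dmesg_output: str) -> Counter:
    """Count kernel OOM kills per process name in dmesg output."""
    kills = Counter()
    for line in dmesg_output.splitlines():
        match = KILL_RE.search(line)
        if match:
            kills[match.group("comm")] += 1
    return kills


# Invented sample in the shape of `dmesg -T` output.
SAMPLE_LOG = """[Mon Jan  1 10:00:00 2024] Out of memory: Killed process 1234 (java) total-vm:4000000kB, anon-rss:800000kB
[Mon Jan  1 10:05:00 2024] eth0: link up
[Mon Jan  1 11:00:00 2024] Out of memory: Killed process 2345 (node) total-vm:1500000kB, anon-rss:300000kB
[Mon Jan  1 12:00:00 2024] Out of memory: Killed process 3456 (java) total-vm:4100000kB, anon-rss:810000kB
"""

for name, count in count_oom_kills(SAMPLE_LOG).most_common():
    print(f"{name}: {count}")
```

On a real box the input could come from subprocess.run(["dmesg", "-T"], capture_output=True, text=True).stdout, typically run as root.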

CPU pressure as a consequence of memory pressure

There’s an old article on my blog about the primary case – kswapd activity. Besides moving memory pages between main memory and swap, kswapd is also involved in handling page faults. A page fault happens when a process accesses a memory page that is not in main memory. Linux then loads the page into memory and resumes the process. Under high pressure this may end up with pages being constantly loaded and unloaded as processes request them. This state, in which the system wastes too much time on memory management, is known as “thrashing”. Thrashing manifests itself as high CPU usage.
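One way to put numbers on this is to sample /proc/vmstat twice and look at how fast the major page fault and swap counters grow. The sketch below assumes the pgmajfault, pswpin and pswpout counters are present; the helper names are hypothetical, and what counts as “too high” depends on the workload.

```python
def parse_vmstat(text: str) -> dict[str, int]:
    """Parse /proc/vmstat content ("name value" per line) into a dict."""
    counters = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) == 2:
            counters[parts[0]] = int(parts[1])
    return counters


# Counters that tend to climb quickly when the system is thrashing.
THRASHING_COUNTERS = ("pgmajfault", "pswpin", "pswpout")


def paging_rates(before: dict[str, int], after: dict[str, int],
                 interval_s: float) -> dict[str, float]:
    """Per-second growth of the paging counters between two samples."""
    return {
        name: (after.get(name, 0) - before.get(name, 0)) / interval_s
        for name in THRASHING_COUNTERS
    }
```

In practice the two samples would be taken a few seconds apart, for example by reading /proc/vmstat, calling time.sleep(5) and reading it again. Sustained high rates alongside high CPU usage point at thrashing rather than at honest work.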

CPU pressure is especially problematic when caused by memory pressure, as it usually renders the system very unresponsive. Because of that it’s also hard to troubleshoot: typically you just see CPU spiking in monitoring tools. Getting into a terminal on the box usually either doesn’t work or is too slow to do anything, so often the only feasible option is to reboot.

More signs of memory pressure in Linux – I/O

A system trying to deal with memory pressure may also bump into I/O as the bottleneck. It can be too much disk swapping, if swap is available. Or it may be just too many disk reads upon page faults. That’s especially true with the AWS EBS bursting feature: if the burst balance is exhausted, I/O degrades even further, grinding the system to a halt.

What else may break because of memory pressure?

As the system gets unstable, all hell breaks loose. One of the interesting cases I had was the network going down under memory pressure. How so? Well, it’s the Swiss cheese model of a disaster in action. Due to memory pressure and lack of swap, the system ends up with CPU pressure. Being slow due to CPU pressure, it fails to renew a DHCP lease in time. The route table is dropped, taking down all connectivity. It’s pretty easy to recover once the CPU pressure is gone. You need other ways to run commands on the box besides SSH, though.

What to do about it?

There’s a more or less recent Linux feature called Pressure Stall Information (PSI), which lays a solid foundation for troubleshooting any kind of pressure.
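PSI is exposed as plain text files under /proc/pressure (cpu, memory and io), each with “some” and “full” lines carrying 10, 60 and 300 second averages. To give a feel for the raw data, here is a minimal parsing sketch; parse_psi and memory_is_stalling are hypothetical helpers, and the 10% threshold is an arbitrary assumption of mine, not a recommended value.

```python
def parse_psi(text: str) -> dict[str, dict[str, float]]:
    """Parse a /proc/pressure/* file into {"some": {...}, "full": {...}}."""
    result = {}
    for line in text.splitlines():
        parts = line.split()
        if not parts:
            continue
        kind, pairs = parts[0], parts[1:]
        result[kind] = {
            key: float(value)
            for key, value in (pair.split("=", 1) for pair in pairs)
        }
    return result


def memory_is_stalling(psi: dict[str, dict[str, float]],
                       threshold: float = 10.0) -> bool:
    """True if tasks were stalled on memory for more than `threshold` percent
    of the last 10 seconds (the threshold is an assumption, tune it)."""
    return psi.get("some", {}).get("avg10", 0.0) > threshold


# Made-up sample in the format of /proc/pressure/memory.
SAMPLE_PSI = """some avg10=12.50 avg60=3.10 avg300=0.75 total=123456
full avg10=8.00 avg60=1.20 avg300=0.30 total=65432
"""

psi = parse_psi(SAMPLE_PSI)
print(psi["some"]["avg10"], memory_is_stalling(psi))
```

On a kernel with PSI enabled, the input would be open("/proc/pressure/memory").read(); the same parser works for the cpu and io files.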

It’s not easy to use as is, though, so I found atop to be especially helpful for troubleshooting these kinds of issues. By leveraging the Linux process accounting facility in combination with PSI and time traveling (by taking periodic snapshots), atop makes troubleshooting a way better experience.